The Hidden Variable in the AI Wars

Why intimacy might be more important than intelligence for OpenAI and Meta

Dear reader,

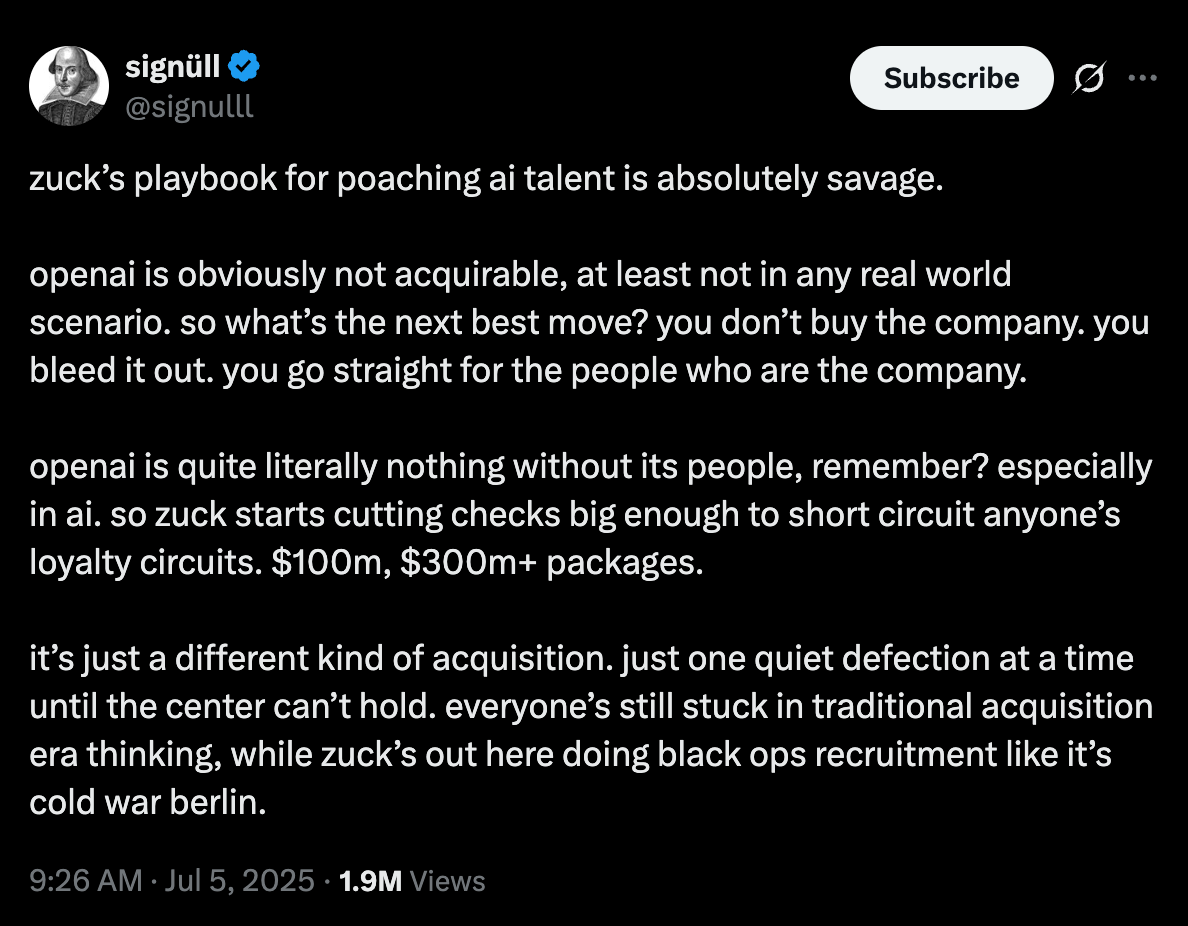

In what has been perhaps the most thrilling arms race between two parties since the Cuban Missile Crisis, Mark Zuckerberg has been dangling a golden carrot on a stick in front of OpenAI’s top researchers and scientists in a bid to finally place Meta in a serious position in the AI lab race.

Let’s zoom out to understand how we got here. OpenAI makes great models. You and I know this; we both use them all the time. Meta, so far, has not made great models. Have you tried Llama lately? Perhaps through a WhatsApp group chat that tags it to ask silly questions or through an inadvertent click on Instagram search? It’s not the best. Llama 4’s launch was largely a disappointment, with rumors swirling around inflated benchmarks and middling performance for what was supposed to be the company’s answer to OpenAI, Anthropic, and, perhaps more importantly, open-source rival DeepSeek. Mark Zuckerberg has been laser-focused on making up ground.

Meta recently made headlines for their blockbuster “acquisition” of 49% of Scale AI for over $14B (really, the main asset being acquired is CEO Alexandr Wang, who may now be the most expensive human of all time? Up for debate in the comments). Zuckerberg even tried to acquire Ilya Sutskever’s company Safe Superintelligence, and succeeded in hiring SSI’s now-ex-CEO Daniel Gross after Sutskever turned down an acquisition.

They haven’t stopped there. Sam Altman recently claimed on a podcast that Meta was offering signing bonuses of up to $100 million for AI researchers to jump ship (an excellent move, by the way—anyone receiving less than that now feels stiffed). So far, a few have.

“[Meta has] started making these, like, giant offers to a lot of people on our team. You know, like, $100 million signing bonuses, more than that [in] compensation per year. I’m really happy that, at least so far, none of our best people have decided to take him up on that.”

Sam Altman on Uncapped with Jack Altman.

Whether that last part is true—that OpenAI has retained its best people—remains to be seen. We see in the table above that a good portion of Meta’s latest hires come from OpenAI; the list even excludes the Zurich-based OpenAI team that Meta recently nabbed.

AI labs are only as good as the talent behind them. As Meta poaches more and more key OpenAI researchers, those researchers themselves can inform more hires based on who they’ve worked with. A future in which Meta competes with OpenAI, Anthropic, and Google on state-of-the-art models suddenly becomes more feasible.

Will Meta actually outcompete them? We’ll see. It is not even clear how many step-change improvements in model capability the next few months will bring. But I think there’s a second, more important variable that will determine who actually wins over the consumer in this race.

Intimacy.

Let me define that term in context. Intimacy refers to how close and integrated artificial intelligence is with the user: how ingrained it is in your daily life, your workflows, your time on the internet, your interaction with technology.

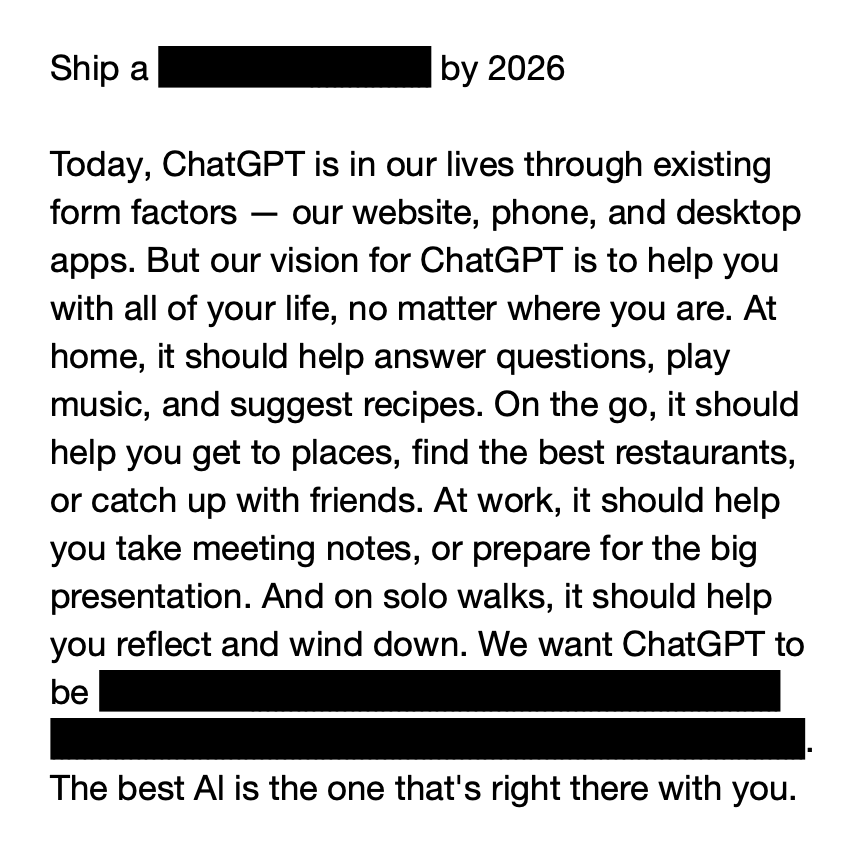

Intimacy is why Sam Altman partnered with Jony Ive to make an AI-native hardware device: the best AI is the one that’s right there with you. Listening, learning, adapting, always ready, always available. An omnipresent friend, assistant, helper, and fixer.

Intimacy, or the lack thereof, is why Google’s AI, despite having arguably the most capable model, has so far failed to achieve the same mainstream cultural relevance that ChatGPT (or even Claude and Grok, for that matter) has. Google makes productivity tools, and Gemini integrates well with them. But productivity tools are not intimate. Gemini struggles to escape the workspace and enter the personal space.

“Ask Chat,” “ChatGPT wrote this,” and “This smells like ChatGPT” are everyday phrases now. No one has ever heard anyone say “OMG Gemini totally wrote this.”

So far, OpenAI with ChatGPT is crushing the intimacy war. People are telling it secrets, developing friendships, analyzing relationships, and talking to it like a therapist. The graph below is from a Harvard poll on generative AI usage. Note how the top three queries in 2025 now relate to personal use cases as opposed to professional use cases. AI has become intimately ingrained not just in the knowledge work that we do but in the emotional side of our lives as well.

No one else is better positioned to steal OpenAI’s consumer mindshare through properly integrated intimacy than the largest social networking company to ever exist. What better way to integrate into emotional and social contexts than in the very digital spaces where these contexts occur? Your friends, followers, dreams, interests, thoughts, all become a part of a digital profile that an AI can access.

A key factor in creating intimacy with AI is creating an AI that understands you, so that every conversation is tailored to you specifically without you having to reintroduce concepts and ideas about yourself (e.g. I’m a college student, I’m a young man, etc.). OpenAI and xAI have launched memory features to help provide a more personalized experience, but the memory draws only from conversations held with the chatbot. What if your AI coworker, friend, therapist, knew everything about you?

To appreciate the scale of what Meta could build, consider what it already owns: the social graph of half the planet. Meta has nearly 3.5 billion active users across its platforms. Almost every other person. That is at least three times as many active users as ChatGPT. Meta knows your friends, your group chats, your habits, and your interests. They have an opportunity to embed their intelligence into a user’s online social flows, bringing AI out of a standalone chatbot and placing it inside your life. Half of the world will have frictionless access to an AI friend, confidant, therapist, and co-conspirator every time they open Instagram, WhatsApp, or Facebook.

It probably won’t matter who’s marginally smarter on benchmarks. If you trust an AI enough to let it into your life, and maybe, without realizing it, your soul, the company that controls it will be the most powerful company in the world.

Really, really interesting take. I was struggling to locate the upshot / "so what" of the OpenAI Zuckings but your article makes it pretty crystal. I still wonder if this knocks down a new threshold to your average, run-of-the-mill T&Cs for the LCD of social media users - the "I clicked without reading and now I'm suing" contingent. Should data collection become more "intimate" (qua "invasive") those people (really all of us...) are in for a rude awakening.

Great read. Been thinking about this a lot. Second-order effects of prioritizing artificial intimacy are a little concerning, particularly for those who use it less as an impersonal tool and more as a substitute for human interaction.